Generative AI is transforming the global news industry, offering both promise and peril. In Europe, where news and cultural content form a cornerstone of both democracy and the economy, policymakers face an urgent challenge. Can regulatory and licensing frameworks ensure that AI strengthens journalism rather than undermining it?

For many young people, AI is becoming the primary source of news. According to the Reuters Institute’s 2025 Digital News Report, about 15 per cent of people under 25 rely on AI tools to stay informed. To do so, AI models are trained on vast amounts of online content, much of which comes from news publishers themselves.

A recent analysis by OtterlyAI reveals that 29 per cent of the sources ChatGPT cites originate from media outlets, while corporate websites lead the way at 36 per cent. For newsrooms, this is not just a statistic: it has real consequences for the sector. Renate Schroeder, Director of the European Federation of Journalists (EFJ), describes AI as a serious challenge to “the economic survival of journalism when it comes to copyright and remuneration”.

The crawl dilemma

Faced with AI scraping, news publishers have adopted two main strategies: filing lawsuits or offering licensing agreements. In the United States, media giants such as the New York Times have taken OpenAI and Microsoft to court for using content without permission.

In Europe, licensing has become the pragmatic alternative. AFP partnered with French AI company Mistral, The Guardian and Le Monde reached agreements with OpenAI, and Axel Springer signed deals with both Microsoft and OpenAI.

Although these arrangements offer a workable solution, they do not include all players, leaving small and local publishers behind. “It’s the big media that is able to negotiate such agreements, whereas local and small media fall off,” Ms Schroeder notes. The biggest challenge, she adds, is how to deal with the media sector as a whole.

The role of EU regulation

Europe’s main regulatory response comes through the EU AI Act, which aims to ensure transparency and accountability for General-Purpose AI (GPAI) models. In particular, Article 53 requires AI providers to document how their models are trained, adopt copyright-compliance policies, and publish summaries of the content used in training.

Yet the EFJ Director sees gaps in practice. “We need mandatory transparency for the data used to train generative AI and explicit author consent,” she says. “It’s data grabbing in an unprecedented way, and we feel we have not been heard.” Ms Schroeder’s concerns reflect a broader view from Europe’s creative community.

In July, the EFJ joined a coalition of dozens of associations representing authors, performers, and publishers to issue a joint statement criticising the Commission’s AI Act implementation measures and the GPAI Code of Practice.

“We strongly reject any claim that the Code of Practice strikes a fair and workable balance, or that the Template will deliver sufficient transparency about the majority of copyright works or other subject matter used to train GenAI models,” the statement reads. “This is simply untrue and a betrayal of the EU AI Act’s objectives.”

Ms Schroeder warns that current transparency obligations cover only a fraction of scraped content. Worse, companies can invoke trade secrets to bypass disclosure. “The Commission keeps talking about innovation and copyright regulation seems to come second,” she says.

The Commission describes the AI Act as designed to help providers ensure transparency while integrating GPAI models into products. The Code of Practice’s transparency chapter provides a model documentation form to record training data in a single place, while its copyright chapter offers practical guidance for compliance with EU law.

AI summaries generate more economic pressure

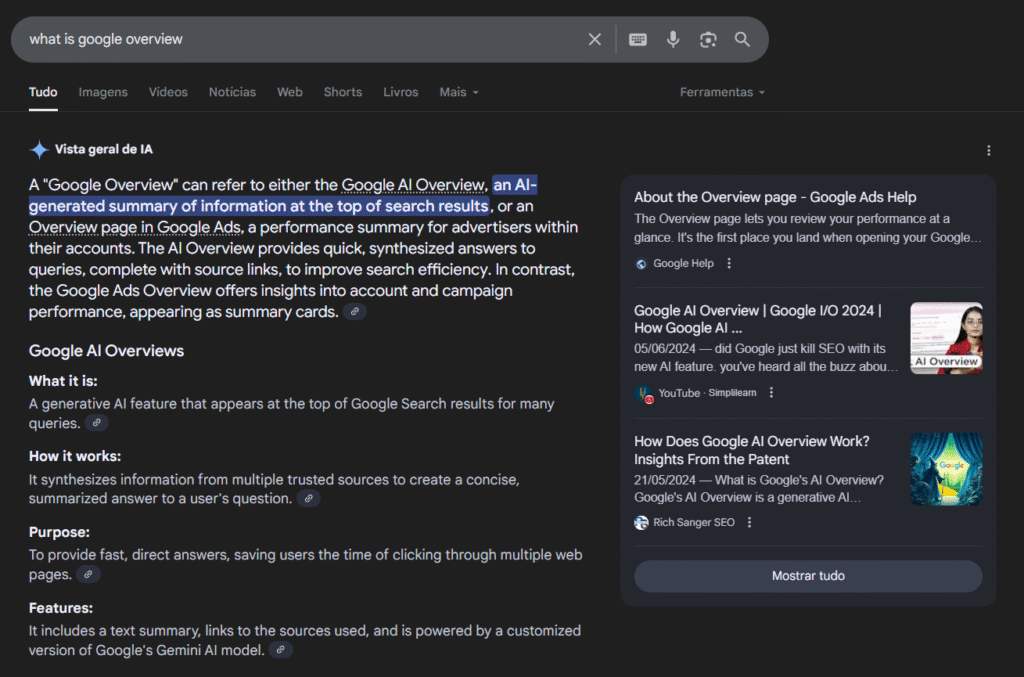

The rise of AI also has a direct financial impact. Similarweb data shows that organic traffic to news sites has dropped 26 per cent since Google launched AI Overviews, a feature that places AI-written summaries at the top of search results. For the sector, this means less advertising income and fewer subscription conversions.

These changes in traffic have led to the bankruptcy of independent publishers such as Turkey’s Gazete Duvar. Even major outlets have registered significant drops in online users. A report published by the Wall Street Journal indicates that the number of visitors to many well-known news sites around the world is declining. Traffic from organic search to HuffPost’s desktop and mobile sites has more than halved in the last three years, while the Washington Post has seen a nearly identical decline.

Policy, trust and media freedom

Europe now stands at a crossroads. Generative AI is reshaping how news is produced, accessed and monetised, presenting both opportunities and risks. Ms Schroeder points to the Nordic countries as a model for responsible integration.

In Sweden and Norway, public service broadcasters have developed AI tools specifically for small-language communities. The initiatives combine editorial oversight, ethical guidelines, and journalist training to ensure AI strengthens reporting rather than undermines it.

But the stakes go far beyond technology. Ms Schroeder warns that Europe’s response to AI will determine not just the economic survival of newsrooms, but also the public’s trust, media freedom, and the very resilience of democracy. “AI tools can be extremely useful, but they must never replace journalistic work. Human control is essential to maintain trust and verify facts.”